With the rise in popularity of streaming services such as BBC iPlayer and Netflix, personalisation is key to engaging mass audiences. Content tailored to individual preferences can increase interest in news, drama and educational content, and improve the experience of live concerts and sports events.

The way in which media experiences are produced, personalised and delivered is evolving rapidly across streaming, television, films, video games and social platforms, enabling new forms of hyper-personalised and immersive storytelling. The recent emergence of generative AI tools is also greatly accelerating both the opportunity and the need for media personalisation.

This exciting Prosperity Partnership brings together the University of Surrey, Lancaster University and the BBC to address key challenges for personalised content creation and delivery at scale using AI and Object-Based Media (OBM). The ambition is to enable media experiences that adapt to individual preferences, accessibility requirements, devices and location.

The BBC, as a world-leading broadcaster working at the cutting edge of media technology, is ideally placed to lead this partnership thanks to its access to and understanding of audiences, its world-leading R&D programme, its globally renowned programme archive and data for testing, and its track record of impartially bringing the industry together to lead the creation and adoption of new standards. The BBC will use the partnership to create compelling personalised media experiences and AI-enabled storytelling for mass audiences.

Surrey’s Centre for Vision, Speech and Signal Processing (CVSSP), the UK’s number one in computer vision research and a world-leading centre of excellence in artificial intelligence (AI) and machine perception, is working alongside the recently created Surrey Institute for People-Centred AI to lead research in AI that transforms captured audio and visual content into media objects. These objects enable the creation and production of customisable, personalised media experiences, and the research addresses problems such as scene understanding, captioning, relighting and speaker localisation.

Lancaster University’s expertise in software-defined networking is being used to develop adaptive network distribution to solve the problem of delivering personalised experiences to millions of people whilst reducing cost and increasing energy efficiency.

The Prosperity Partnership builds on a successful research collaboration of more than 20 years between the University of Surrey and the BBC, which has pioneered broadcast technologies used worldwide, as well as on long-standing research collaborations between the BBC and Lancaster University that have resulted in the development of new technologies.

Vision and ambition

Personalising media experiences for the individual is vital for engaging audiences young and old: it allows more meaningful encounters tailored to their interests, makes them part of the story, and increases accessibility.

The Prosperity Partnership aims to place the UK at the forefront of ‘Personalised Media’, enabling the creation and delivery of new services and positioning the UK creative industry to lead future personalised media creation and the intelligent network distribution needed to render personalised experiences for everyone, anywhere. Leading this advance beyond streaming media towards personalisation, interaction and immersion is critical for the future of the UK creative industry and opens new horizontal markets.

Mass-media audio-visual ‘broadcast’ content has moved increasingly towards Internet delivery, which, coupled with the power of AI, creates exciting potential for hyper-personalised media experiences delivered at scale to mass audiences.

Realisation of personalised experiences at scale presents three fundamental research challenges:

- Capture: Transformation of video to audio-visual objects –> Audio-Visual AI

- Production: Creation of personalised experiences –> AI for creative tools

- Delivery: Intelligent use of network resource for mass audiences –> AI for Networks

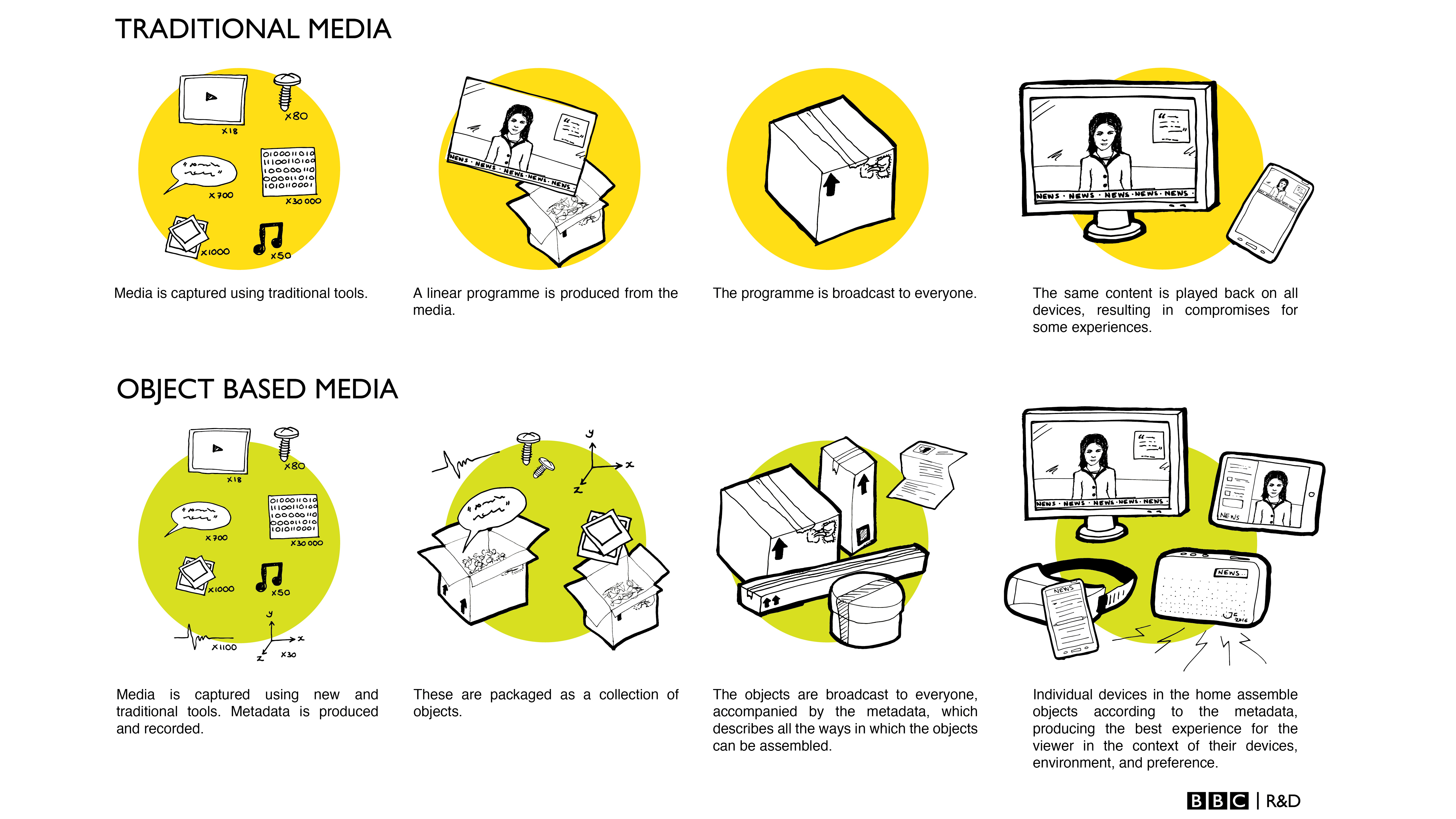

Advances in audio-visual AI for machine understanding of captured content are enabling the automatic transformation of captured 2D video streams into an object-based media (OBM) representation. OBM allows the media experience to be adapted for efficient production, delivery and personalisation whilst maintaining the perceived quality of the captured video content.
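To make the idea of an object-based representation more concrete, here is a minimal Python sketch; it is not the partnership's actual data model. It assumes a scene is kept as a list of hypothetical `MediaObject` records, each tagged with a kind and an assumed narrative-importance score, and shows how a renderer could derive per-object gains from a listener's hypothetical `Preferences`, for example boosting dialogue and dropping low-importance objects for someone with reduced hearing. All class, field and parameter names are illustrative assumptions.

```python
from dataclasses import dataclass


# Hypothetical OBM model: rather than one pre-mixed soundtrack, the scene is
# kept as separate objects plus metadata, so each viewer can get a different mix.
@dataclass
class MediaObject:
    name: str
    kind: str          # assumed labels, e.g. "dialogue", "music", "effects"
    importance: float  # assumed narrative importance in [0, 1]


@dataclass
class Preferences:
    dialogue_boost_db: float = 0.0  # extra gain applied to dialogue objects
    min_importance: float = 0.0     # objects below this importance are muted
    background_cut_db: float = 0.0  # attenuation applied to non-dialogue objects


def render_gains(scene: list[MediaObject], prefs: Preferences) -> dict[str, float]:
    """Return a per-object gain in dB; muted objects get -inf dB."""
    gains: dict[str, float] = {}
    for obj in scene:
        if obj.importance < prefs.min_importance:
            gains[obj.name] = float("-inf")  # drop low-importance objects entirely
        elif obj.kind == "dialogue":
            gains[obj.name] = prefs.dialogue_boost_db
        else:
            gains[obj.name] = -prefs.background_cut_db
    return gains
```

For a scene with a narrator (dialogue, importance 1.0), a score (music, 0.6) and crowd noise (effects, 0.2), preferences of a 6 dB dialogue boost, a 3 dB background cut and a minimum importance of 0.3 give gains of 6.0, -3.0 and -inf dB respectively. Because the objects stay separate until rendering, the same captured content can serve every viewer with a different mix.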

Delivering personalised experiences to audiences of millions requires media processing and distribution architectures to be transformed into a distributed, low-latency computation platform that allows OBM and compute-intensive tasks to be deployed flexibly across the network. This achieves efficiency in terms of cost and energy use while providing the best possible quality of experience for the audience within the constraints of the system.

Research approach

Providing solutions for personalised media requires fundamental scientific and technological advances in three key areas:

- Audio-visual AI: real-time understanding of general real-world dynamic scenes.

- Personalised Experiences at Scale: intelligent, user-centred media personalisation for enhanced quality of experience (QoE).

- Intelligent Network Compute: software-defined networks for efficient use of network compute and delivery resources at scale.

The Prosperity Partnership takes a holistic approach that is centred on the user’s personalised media experience, structured in five interlinked research streams:

- Stream 1: Personalised Media Experiences

- Stream 2: Object-based capture and representation of live events

- Stream 3: Intelligent Object-based Production of Personalised Experiences

- Stream 4: Intelligent Network Delivery of Personalised Experiences at Scale

- Stream 5: Business Impact

Use Cases

The research outputs from this Prosperity Partnership are being developed with a range of target use cases in mind. Examples include:

News and Documentaries

- News tailored to the individual while maintaining integrity

- Personal interest, location, activity, device, language and time

- Personalisation to dynamically adapt content

- Intelligently prioritise information according to user preferences

Drama

- Enhanced storytelling experiences

- Adapt to accessibility requirements and device

- Enhance audio/visual outputs to reflect narrative importance, e.g. adapting the intelligibility of speech to the listener’s hearing ability

Live Events: Sports and Music

- Sense of ‘being there’ at a live event

- Personalised immersive content

- User-centred rendering of spatial sound and vision

- Shared experiences with friends and family

Education

- Personalisation to individual learning style and pace

- Improved education in the classroom and at home

- Interactive educational experiences that adapt to interest and level of understanding

Games

- Personalised to individual viewpoint and interaction

- Interactive storytelling

- Potential to adapt to individual interest, activity etc.

Other use cases are being formulated and explored. If you have an interesting use case, please contact us.